@DE PLAYER

20:00hrs

Admission: €7,-

Rotterdam, The Netherlands

TGC #3 x XPUB

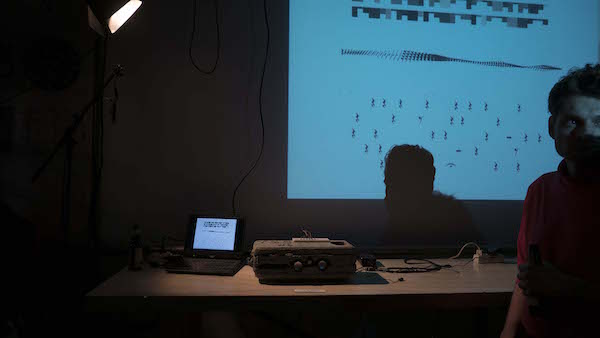

TGC #3 is the third issue of the TETRA GAMMA CIRCULAIRE series (an unknown audio magazine), initiated in 2015 by the polymorphic production platform DE PLAYER in Rotterdam. It is also the second Special Issue of the Experimental Publishing (XPUB) programme of the Media Design Master of the Piet Zwart Institute (are you still following?). The TGC series is famous as a magazine with no limits and no fixed face, appearing in unexpected forms and situations. TGC #3 fits right in. Concretely, very concretely, TGC #3 is itself a particular kind of publishing platform (some may dare say a jukebox), experimentally engineered for sonic experiments, instruments and installations. The first edition is limited to 12 copies and, to inaugurate its launch, it is distributed with works created and compiled by the XPUB students. This evening the new issue will be demonstrated in its dynamic applications, and for the festivities we flew in some other nerdy sound makers and breakers.

Experimental Publishing is a new course in the Piet Zwart Institute's Media Design Master programme. The course revolves around two core principles: first, inquiry into the technological, political and cultural processes through which things are made public; and second, the desire to expand the notion of publishing beyond print media and its direct digital translation. The Experimental Publishing students who contributed to the development of TGC #3 are Karina Dukalska, Max Franklin, Giulia de Giovanelli, Clàudia Giralt, Franc González, Margreet Riphagen, Nadine Rotem-Stibbe and Kimmy Spreeuwenberg.

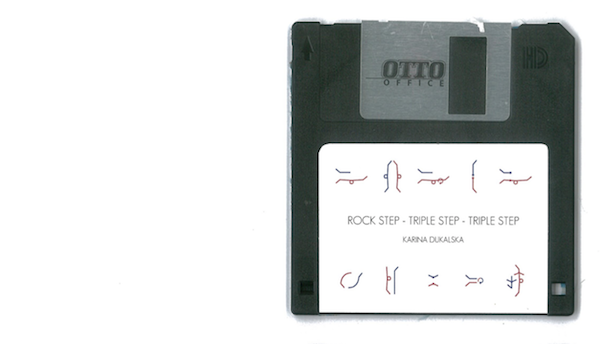

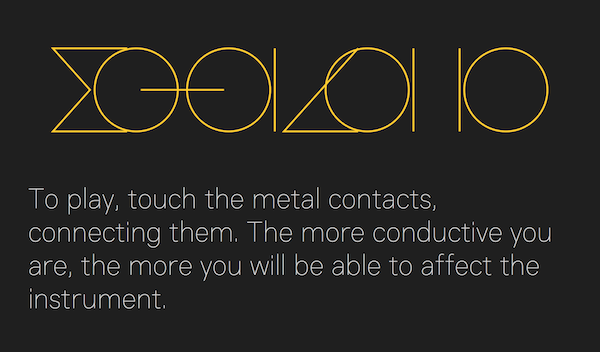

Rock Step - Triple Step - Triple Step

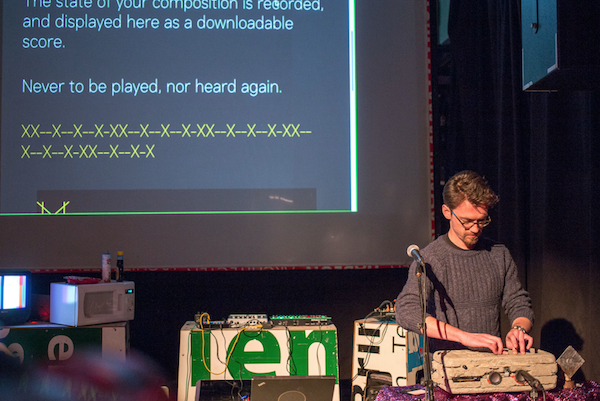

A chaotic software and hardware synthesiser and generative sequencer, designed to explore improvisation and musical interactivity. To play, touch the metal contacts to connect them. The more conductive you are, the more you will be able to affect the instrument. The state of your composition is recorded and displayed here as a downloadable score. Never to be played, nor heard again.
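The description does not say how the instrument is built, so the following is only a hypothetical Python sketch of the idea: a normalized conductivity reading (0.0 for no contact, 1.0 for a very conductive touch; this scale is an assumption) sets the probability that each step's note mutates, and every played state is appended to a score. The class name `ChaoticSequencer`, the MIDI note range and the mutation rule are all invented for illustration.

```python
import random
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ChaoticSequencer:
    """Hypothetical touch-driven sequencer: the more conductive the touch
    (0.0 = no contact, 1.0 = very conductive), the more the pattern mutates."""
    steps: int = 8
    seed: Optional[int] = None  # None: seeded from the OS, every run differs
    pattern: list = field(default_factory=list)
    score: list = field(default_factory=list)  # every (step, note) ever played

    def __post_init__(self):
        self.rng = random.Random(self.seed)
        if not self.pattern:
            self.pattern = [60] * self.steps  # start on middle C (MIDI 60)

    def tick(self, step: int, conductivity: float) -> int:
        """Play one step; mutation probability equals the clamped conductivity."""
        conductivity = min(max(conductivity, 0.0), 1.0)
        i = step % self.steps
        if self.rng.random() < conductivity:
            jump = self.rng.randint(-12, 12)  # random leap of up to an octave
            self.pattern[i] = min(max(self.pattern[i] + jump, 36), 96)
        note = self.pattern[i]
        self.score.append((step, note))  # record the state for the score
        return note

    def score_text(self) -> str:
        """Render the recorded score as plain text, one 'step note' per line."""
        return "\n".join(f"{s} {n}" for s, n in self.score)


if __name__ == "__main__":
    seq = ChaoticSequencer()
    for step in range(16):
        # No contact for the first bar, a firm touch for the second.
        seq.tick(step, conductivity=0.0 if step < 8 else 0.9)
    print(seq.score_text())
```

With zero conductivity the pattern never changes; the closer the reading gets to 1.0, the more often each step jumps, and `score_text()` gives the kind of downloadable record the piece describes.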

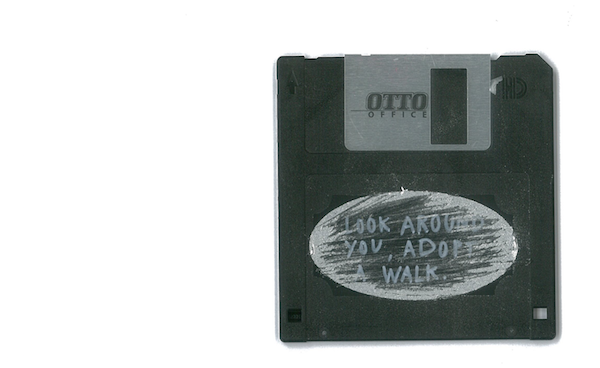

This is an audio guide to an experiment in gait analysis. If you have never heard the term, gait analysis is the study of walking patterns, as used by new biometric surveillance technologies. People are asked to walk following a series of spoken instructions. The walks are stored temporarily on a page where you are invited to “adopt a walk” of another person. “Have you ever tried to identify someone by the way they walk? Surveillance technologies are using homogenic perception of human beings as a model for their mechanics.” With this experiment you are invited to observe the characteristics of other people's walks and adopt them. In adopting a different walk, do you become someone else? Will it be your identity or someone else's that is detected by these surveillance algorithms?

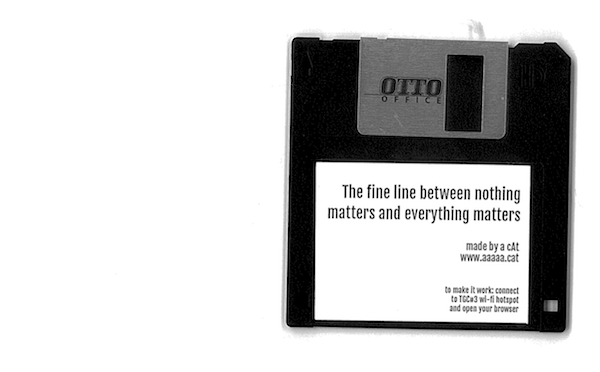

“The fine line between everything matters and nothing matters.” The idea of this project is to represent this line digitally and sonically, and to let the audience play with it: seeking balance and breaking it, creating harmonious sounds and disrupting them, experiencing how close opposites can be to each other.

This experiment analyses and measures data from people’s motion and explores how we humans become an extension of technology, adapting ourselves to immaterial environments by seeking control over information and performing sounds.

CONCEPT

Technology is providing us with new ways to shape our perception of space, while at the same time it is transforming our bodies into gadgets. This not only changes our spatial awareness but also extends our senses beyond their given nature. Moreover, control systems that regulate and command specific behaviours can be very practical tools for improving physical functions or translating their data. This experiment, for instance, employs “Optical Flow”, a technique that detects the motion of objects in an image between consecutive frames, and “Open Sound Control (OSC)”, a protocol for formatting and exchanging data between different devices and programs, here from Python to Pure Data. The possibilities for overcoming human physical or cognitive limitations by plugging a body into an electronic or mechanical device are still very hypothetical and may extend beyond our imagination; nevertheless, technology is continuously turning the abstract or fictional conception of “cybernetics” into a more tangible reality. Communication between automated and living systems keeps evolving and upgrading, giving rise to more sophisticated engineered tools that might enable us to increase our knowledge and reshape our perception through deeper experiences. In this experiment, the potential for controlling data through motion in space, while becoming independent of physicality, opens up new creative and pragmatic alternatives for facing both technological and communication constraints.

BODY

This body analyses human motion in space and detects it using “Optical Flow” in Python, through a series of predesigned multidirectional interpreters. These interpreters are made up of a series of points (intersections) forming a grid that intersects with the movement.
The movement is detected in the form of numeric values, which are automatically formatted and transmitted to a graphic array in Pure Data. The array arranges these values and generates a polygonal waveform from the received coordinates, with “x” ranging from 0 to 10 and “y” from -1 to 1. This activates an “oscillator” object, which defines the frequency of the tone, together with a “metro” object, which times its duration in milliseconds and so repeats the audio (rewriting it in the display). The intersections and the graphic array, together with the entire Pure Data patch, become an interactive notation system, while people become the instrument or tool that triggers it.

WORK PROGRESS

While exploring the connection between motion and sound, experiments were performed with different software and tools, which substantially strengthened the additional material in this project. For instance, the Kinect sensor was tested together with Synapse, which receives input data from the Kinect and sends it on to Ableton or Max/MSP. Motion detection was also explored together with “color detection” in Pure Data, which brought up more interesting alternatives. Sound recording and feedback loops were further tested with this method, though mechanically they were hardly accurate. Finally, with “Optical Flow”, the work was reconfigured with a wider sense of interacting with data.
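The Python-to-Pure-Data chain described above (grid intersections, numeric values, OSC, a Pd array with x from 0 to 10 and y from -1 to 1) might be sketched as follows. This is not the project's code. Assumptions: the motion field arrives as a 2D list of signed horizontal flow values (in practice it could come from a dense optical-flow routine such as OpenCV's `cv2.calcOpticalFlowFarneback`); the 11 x 3 grid, the per-column averaging, the OSC address `/wave` and UDP port 9000 are all hypothetical. The OSC message is encoded by hand following the OSC 1.0 layout (padded address, type-tag string, big-endian float32 arguments); a library such as python-osc would normally do this.

```python
import socket
import struct


def osc_string(s: str) -> bytes:
    """OSC 1.0 string: ASCII bytes, NUL-terminated, padded to a multiple of 4."""
    b = s.encode("ascii") + b"\0"
    return b + b"\0" * (-len(b) % 4)


def osc_message(address: str, *floats: float) -> bytes:
    """Encode an OSC message whose arguments are all 32-bit floats ('f' tags)."""
    tags = "," + "f" * len(floats)
    payload = b"".join(struct.pack(">f", v) for v in floats)  # big-endian
    return osc_string(address) + osc_string(tags) + payload


def grid_points(motion, cols=11, rows=3):
    """Sample a motion field at a cols x rows grid of intersections.

    `motion` is a 2D list (height x width) of signed motion values (assumed
    input). Needs cols >= 2 and rows >= 2. Returns one (x, y) point per grid
    column, with x spread over 0..10 and y scaled into -1..1."""
    h, w = len(motion), len(motion[0])
    px = [round(c * (w - 1) / (cols - 1)) for c in range(cols)]
    py = [round(r * (h - 1) / (rows - 1)) for r in range(rows)]
    # Average the signed motion over the intersections of each vertical line.
    means = [sum(motion[y][x] for y in py) / rows for x in px]
    peak = max(abs(v) for v in means) or 1.0  # avoid dividing by zero
    return [(10 * c / (cols - 1), v / peak) for c, v in enumerate(means)]


def send_points(points, host="127.0.0.1", port=9000, address="/wave"):
    """Send the points as one flat OSC message (x0, y0, x1, y1, ...) over UDP."""
    flat = [v for point in points for v in point]
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(osc_message(address, *flat), (host, port))


if __name__ == "__main__":
    # Synthetic stand-in for optical flow: something moving right mid-frame.
    motion = [[0.0] * 64 for _ in range(48)]
    for y in range(48):
        for x in range(28, 36):
            motion[y][x] = 2.5
    points = grid_points(motion)
    print(points)
    send_points(points)
```

On the Pure Data side, a chain such as `[netreceive -u -b 9000]` into `[oscparse]` could unpack the message before the values are written into the array; how the original patch receives and routes them is not documented here.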

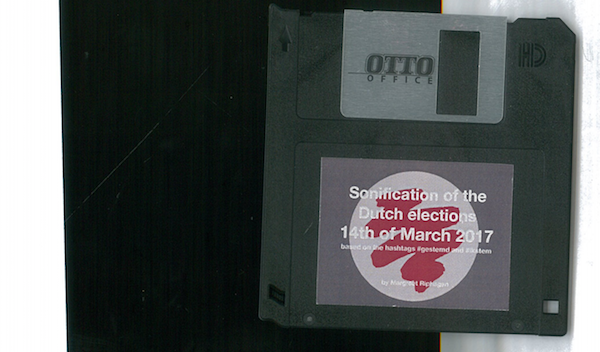

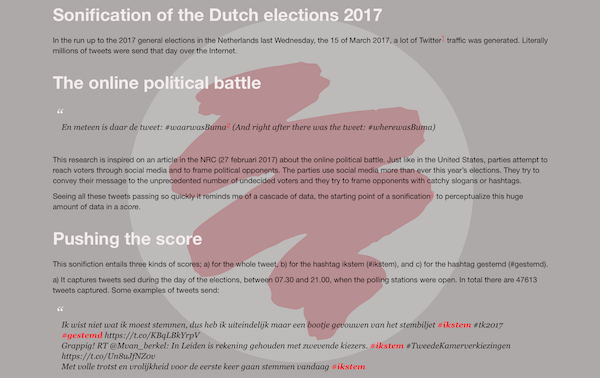

A sonification of the Dutch elections of March 2017, based on the hashtags #gestemd and #ikstem. Around the Dutch general elections, held on Wednesday 15 March 2017, a lot of Twitter traffic was generated: literally millions of tweets were sent that day. This sonification consists of three kinds of scores: a) for the whole tweet, b) for the hashtag #ikstem, and c) for the hashtag #gestemd.
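The description does not say how the tweets were turned into sound. As one possible reading, here is a hypothetical Python sketch that counts tweets per hour for each of the three subsets and maps each count linearly onto a MIDI pitch. Reading “the whole tweet” as all collected tweets regardless of hashtag is an assumption, as are the one-hour windows, the 48 to 84 pitch range and the naive case-insensitive substring match for hashtags.

```python
from collections import Counter
from datetime import datetime


def bin_counts(tweets, tag=None, minutes=60):
    """Count tweets per time window of `minutes` within one day.

    `tweets` is an iterable of (datetime, text) pairs. If `tag` is given, only
    tweets whose text contains it (case-insensitive substring) are counted."""
    counts = Counter()
    for ts, text in tweets:
        if tag is None or tag.lower() in text.lower():
            counts[(ts.hour * 60 + ts.minute) // minutes] += 1
    return counts


def counts_to_notes(counts, low=48, high=84):
    """Map each window's count linearly onto a MIDI pitch between low and high."""
    if not counts:
        return []
    peak = max(counts.values())
    return [(w, low + round((high - low) * counts[w] / peak)) for w in sorted(counts)]


def three_scores(tweets, minutes=60):
    """Scores for a) all tweets, b) #ikstem only, c) #gestemd only."""
    tweets = list(tweets)  # allow one-shot iterators to be read three times
    return {
        "all": counts_to_notes(bin_counts(tweets, None, minutes)),
        "#ikstem": counts_to_notes(bin_counts(tweets, "#ikstem", minutes)),
        "#gestemd": counts_to_notes(bin_counts(tweets, "#gestemd", minutes)),
    }


if __name__ == "__main__":
    sample = [  # made-up illustrative input, not real election data
        (datetime(2017, 3, 15, 9, 5), "Net #gestemd"),
        (datetime(2017, 3, 15, 9, 40), "#ikstem vandaag"),
        (datetime(2017, 3, 15, 10, 15), "#gestemd #ikstem"),
        (datetime(2017, 3, 15, 10, 20), "#Gestemd"),
    ]
    print(three_scores(sample))
```

With this made-up sample, the #gestemd score rises from pitch 66 in the 9:00 window to 84 in the 10:00 window, because that hashtag appears twice as often in the second hour.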

What you are hearing is a score made from the table of contents of the 'Watchlisting Guidance' using Pure Data. This document was written by the National Counterterrorism Center (NCTC). You can access it here: https://theintercept.com/document/2014/07/23/march-2013-watchlisting-guidance/

Make a silence. “There is no such thing as an empty space or an empty time. There is always something to see, something to hear. In fact, try as we may to make a silence, we cannot.” (John Cage, Silence: Lectures and Writings)